Tutorials

Tutorials will take place at ETH Main Building, room Audi Max (HG F 30).

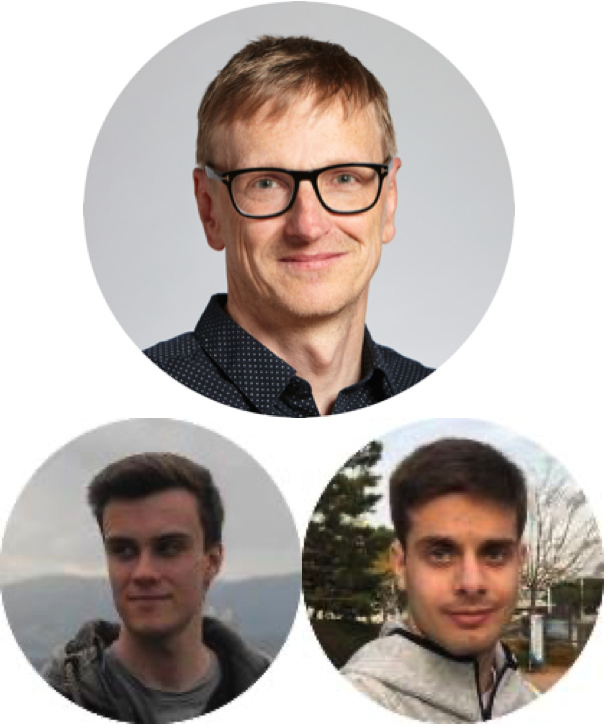

Prof. Dr. Jonas Peters

Causality: Models, Learning, and Invariance

In science, we often want to understand how a system reacts under interventions (e.g., under gene knock-out experiments or a change of policy). These questions go beyond statistical dependences and therefore cannot be answered by standard regression or classification techniques. In this tutorial we will learn about the powerful language of causality and recent developments in the field. No prior knowledge about causality is required.

More precisely, we introduce structural causal models and formalize interventional distributions. We define causal effects and show how to compute them if the causal structure is known. We discuss assumptions under which the causal structure becomes identifiable from observational (and interventional) data and describe the corresponding methodology. If time allows, we will also present connections between causality and distributional robustness.
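To illustrate the difference between conditioning and intervening, here is a minimal Python sketch under a hypothetical linear Gaussian SCM with graph Z → X, Z → Y, X → Y. All coefficients and function names are illustrative assumptions, not material from the tutorial. The sketch compares the naive regression slope of Y on X, the coefficient of X after adjusting for Z, and the effect estimated directly from samples of the interventional distributions under do(X = 1) and do(X = 0).

```python
import random

# Hypothetical linear Gaussian SCM (illustrative coefficients, not from the tutorial):
#   Z := N_Z,   X := 2*Z + N_X,   Y := X + 3*Z + N_Y
# with independent standard normal noise terms N_Z, N_X, N_Y.
def sample(n, do_x=None, seed=0):
    """Draw n samples (z, x, y); if do_x is set, X's assignment is replaced by X := do_x."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.gauss(0, 1)
        x = 2 * z + rng.gauss(0, 1) if do_x is None else do_x
        y = x + 3 * z + rng.gauss(0, 1)
        rows.append((z, x, y))
    return rows

def mean(v):
    return sum(v) / len(v)

def cov(a, b):
    """Empirical covariance of two equally long sequences."""
    ma, mb = mean(a), mean(b)
    return sum((p - ma) * (q - mb) for p, q in zip(a, b)) / len(a)

n = 50_000
z, x, y = zip(*sample(n))

# Naive regression of Y on X: mixes the causal path X -> Y with the confounding path X <- Z -> Y.
naive = cov(x, y) / cov(x, x)

# Regression of Y on (X, Z): coefficient of X, solved from the 2x2 normal equations.
# Adjusting for Z is valid here only because, in this assumed graph, Z is the sole parent of X.
sxx, szz, sxz = cov(x, x), cov(z, z), cov(x, z)
sxy, szy = cov(x, y), cov(z, y)
adjusted = (sxy * szz - szy * sxz) / (sxx * szz - sxz ** 2)

# Reference value from interventions: E[Y | do(X=1)] - E[Y | do(X=0)].
effect = (mean([r[2] for r in sample(n, do_x=1.0, seed=1)])
          - mean([r[2] for r in sample(n, do_x=0.0, seed=2)]))

print(f"naive slope     {naive:.2f}")     # population value 11/5 = 2.2
print(f"adjusted for Z  {adjusted:.2f}")  # population value 1.0
print(f"do-intervention {effect:.2f}")    # population value 1.0
```

In this assumed model the naive slope should come out near 2.2, while the adjusted coefficient and the interventional difference should both be close to the true causal effect of 1. The adjustment works only because the graph is taken as known; learning the structure from data is the separate identifiability question the tutorial discusses.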

Biography: Jonas is a professor of statistics at the Department of Mathematical Sciences at the University of Copenhagen. Previously, he worked at the Max Planck Institute for Intelligent Systems in Tuebingen and at the Seminar for Statistics, ETH Zurich. He studied Mathematics at the University of Heidelberg and the University of Cambridge. In his research, Jonas aims to infer causal relationships from different types of data and to build statistical methods that are robust with respect to distributional shifts. He seeks to combine theory, methodology, and applications (for example, in Earth system science and biology). His methodological work relates to areas such as computational statistics, causal inference, graphical models, high-dimensional statistics, and statistical testing. Jonas has received several awards, such as the Guy Medal in Bronze, the Silver Medal of the Royal Danish Academy of Sciences and Letters, and the ASA Causality in Statistics Education Award. Since 2021, he has been a member of the COPSS Leadership Academy.

Prof. Dr. Roger Wattenhofer

Florian Grötschla, Joël Mathys

Graph Neural Networks

Deep learning traditionally deals with structured and ordered inputs: simple scalars, vectors, matrices (images, temporal data), and tensors (videos). But some of the world’s most interesting data is best represented by graphs, so it is natural to want to perform deep learning on graph-structured inputs as well. This area has only recently started to develop, mostly in the form of graph neural networks (GNNs).

In the first part of this tutorial we will discuss the fundamentals of GNNs. How do GNNs work, and what are the main challenges when dealing with graph inputs? What are oversmoothing, underreaching, and oversquashing, and why do they affect a GNN? How are GNNs connected to distributed algorithms and the graph isomorphism problem? Why are GNNs a good setting for discussing extrapolation and other grand challenges in deep learning? And what are some of the most recent developments in GNN research?
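The basic mechanism can be sketched as message passing: in each layer, every node aggregates its neighbors' states and combines them with its own. The plain-Python sketch below assumes scalar features, sum aggregation, ReLU, and fixed hypothetical weights `w_self` and `w_neigh` in place of learned parameters. On a path graph it shows why depth matters: a node can only receive information from nodes within as many hops as there are layers, which is the root of underreaching.

```python
# Minimal message-passing layer on a small undirected graph (illustrative sketch).
# Assumptions: scalar node features, sum aggregation, ReLU, and fixed weights
# w_self / w_neigh standing in for learned parameters.

def gnn_layer(features, adjacency, w_self=1.0, w_neigh=0.5):
    """One round: h_v' = ReLU(w_self * h_v + w_neigh * sum of h_u over neighbors u of v)."""
    new = {}
    for v, h_v in features.items():
        message = sum(features[u] for u in adjacency[v])  # aggregate neighbor states
        new[v] = max(0.0, w_self * h_v + w_neigh * message)  # combine and apply ReLU
    return new

# Path graph 0 - 1 - 2 - 3 - 4; only node 4 carries a non-zero input feature.
adjacency = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
h = {v: 0.0 for v in adjacency}
h[4] = 1.0

history = []  # feature of node 0 after each layer
for layer in range(1, 5):
    h = gnn_layer(h, adjacency)
    history.append(h[0])
    print(layer, round(h[0], 4))
```

Running it prints 0.0 for node 0 after layers 1 to 3 and 0.0625 after layer 4, the first depth that matches the 4-hop distance to node 4. Libraries such as PyTorch Geometric implement the same aggregate-and-update pattern on tensors, with learned weights.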

The second part of the tutorial will be hands-on. You will get to know the PyTorch Geometric library, use it to implement your first GNN, and learn how to customize it. To take part in the practical session, a laptop with internet access is enough. We have prepared a few challenges on Colab and Kaggle for you to solve; in particular, you will try to find your way through a maze.

Here's the notebook for the practical part of the tutorial.

Biographies: Roger Wattenhofer is a professor at ETH Zurich. He has also spent several years at Microsoft Research, Brown University, and Macquarie University. His work has received multiple awards, e.g., the Prize for Innovation in Distributed Computing for his work on distributed approximation. He published the book “Blockchain Science: Distributed Ledger Technology”, which has been translated into Chinese, Korean, and Vietnamese.

Florian Grötschla and Joël Mathys are PhD students at ETH Zurich and experts on GNNs. Florian and Joël will organize the hands-on part of the tutorial. Joël completed his studies at ETH Zurich; he is interested in combining classical graph theory and logic with machine learning. Florian studied at the Karlsruhe Institute of Technology and is now exploring the power and limitations of GNNs and how to make them generalize better to larger instances.